Quick Summary: Can AI Create a Life? The Truth About Digital Biology

- Content Type: Research-Based Guide

- Last Updated: August 12, 2025

Can AI create a life? People have spent billions of dollars and countless hours chasing this idea even though success isn’t certain. The natural biosphere on Earth is the result of billions of years of evolution that led to today’s biodiversity. Meanwhile, our efforts to recreate this process with technology have started. Life on Earth first appeared around 3.8 billion years ago, and scientists are still trying to figure out the core principles that could let us build artificial life.

Artificial life goes far beyond basic cellular automata driven by math-based rules. It includes bolder ideas like robots that assemble themselves and synthetic genomes placed into living bacteria. Technology keeps advancing side by side with biology. For example, AI now makes it seem like loved ones who have passed away can interact with their families. This article will take a look at the science behind digital biology, question whether creating life can happen without a full understanding of it, and dive into whether AI could ever possess life. In short, can AI create a life?

The Struggle to Define Life in Science

Explaining what life means is one of biology’s biggest challenges. Scientists have offered many definitions, but none has gained universal agreement. This question shapes every study that aims to create artificial life and decides whether we can ever say we made something alive.

The N=1 Problem in Biology

Biology deals with a distinct challenge because we base everything we know about life on just one example. Scientists call this the “N=1 problem,” and it restricts how much we can generalize about what life is. Researchers in molecular biology found that all life on Earth traces back to a last universal common ancestor (LUCA). This means Earth’s biosphere acts as a single data point. Because of this, we cannot tell which traits are universal across all possible life forms and which ones exist due to Earth’s unique evolutionary history.

The N=1 problem traps scientists in a loop when they try to find alien life or develop artificial life. With no extra examples to work from, it becomes hard to notice life that looks very different from Earth’s usual patterns. On top of that, having just one sample stops us from figuring out which traits are crucial to all forms of life and which ones are just specific to how life evolved here.

Why It’s Hard to Define Life

Researchers have gathered around 123 definitions of life, but none has been universally accepted. Most modern definitions describe life by its traits instead of explaining what it is. These traits include maintaining internal balance (homeostasis), having cells, using energy, growing, adapting, responding to surroundings, and reproducing. However, every definition runs into exceptions and borderline cases.

Viruses show how tricky it can be to define life. They mutate, evolve, and carry genetic material, which are traits we link to living things. But they have no metabolism and can’t reproduce without a host. On the other hand, mature red blood cells in mammals play important roles even though they carry no genes, and some bacteria die if they are not in a host. Likewise, certain fish, like the Amazon molly, need males from other species to reproduce since they can’t do it within their own kind. Yet we still consider all of them alive without question.

These unusual examples suggest that life is easier to grasp as a group of shared traits than as something defined by strict rules. Philosopher Carol Cleland points out that standard definitions cannot answer the big question in science: “What is life?” When scientists listed traits of living things and asked experts about them, they discovered several overlapping qualities of life instead of a sharp boundary separating living and non-living things.

Can Scientists Make Life Without Knowing It?

Artificial life researchers face a tricky question. Do you need to create something first to understand it, or does understanding have to come before creating it? Physicist Richard Feynman once said: “What I cannot create, I do not understand.” This implies that making artificial life could teach us more than just trying to define it with theories.

Synthetic biology tackles this in a few different ways:

- Bottom-up methods focus on making new parts like tweaked amino acids

- Top-down techniques modify existing organisms using their molecular components

- Digital simulations explore how parts of living systems work

But every method still faces big obstacles. Abiogenesis researchers struggle to show how such complex and interconnected systems could form in gradual stages. A good example is how a cell copies its own DNA: copying needs enzymes that are made by translating genes in that same DNA, which creates a chicken-and-egg dilemma. Synthetic biologists, for their part, find it hard to mix genetic materials from different sources because genetic parts have adapted to work together in specific ways.

Oddly enough, knowing more about life has made defining it harder, not easier. As scientists learn more, the once-clear line between what’s alive and what’s not becomes harder to see. This makes artificial life seem both more achievable and tougher to understand, and it raises the question: can AI create a life?

Artificial Life Systems: Soft, Hard, and Wet Methods

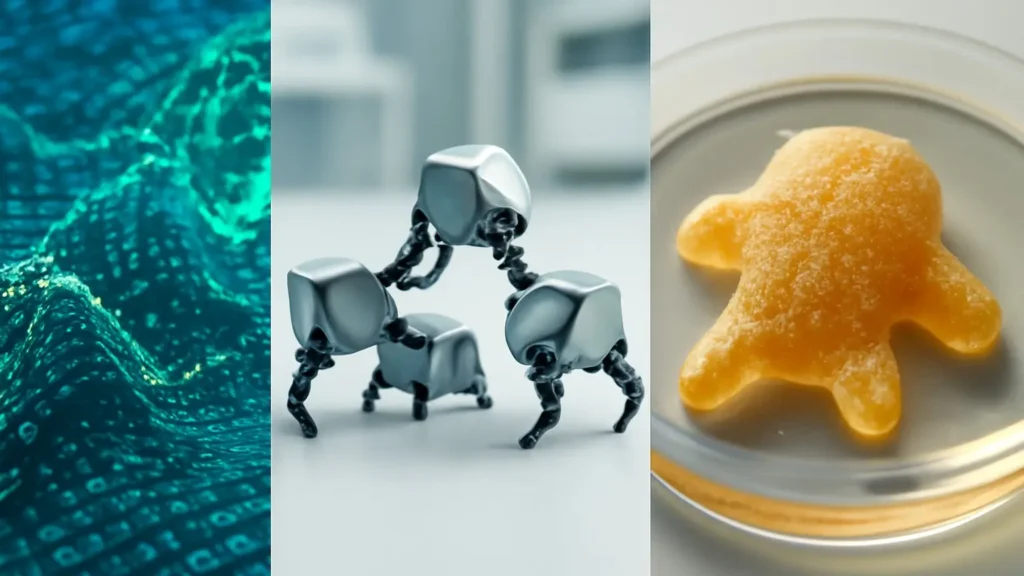

Artificial life, or ALife, has split into three main areas of study. Each focuses on creating life-like systems using different methods and materials. ALife does not just simulate biological functions on computers but works to create new environments where evolution can happen.

Soft ALife: Tierra and Digital Evolution

Soft ALife uses software to build systems that act like living organisms. Ecologist Thomas Ray introduced the groundbreaking Tierra system in the early 1990s, which stands out as one of the key platforms in the field of digital evolution. In Tierra, programs fight over CPU time and memory access, and they can mutate, replicate themselves, or recombine. Instead of relying on a specific fitness function like traditional evolutionary computation models, survival in Tierra depends on how organisms interact with their surroundings.

Digital evolution systems need three main parts: inheritance, variation, and selection. Inheritance means traits are passed from parents to their offspring. Variation comes from mutations. Selection happens when some organisms reproduce more than others because they are fitter. While Tierra showed things like host-parasite relationships evolving, it stopped getting more complex and didn’t achieve the open-ended evolution that happens in nature. Platforms such as Avida built on these ideas by making self-replicating programs compete for space on a grid of cells.
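
The three ingredients can be sketched in a few lines of Python. This is a toy model, not how Tierra or Avida work: the bit-string genomes, the constants, and the explicit `fitness` function (which simply counts 1 bits) are all assumptions made for illustration, and Tierra in particular has no explicit fitness function.

```python
import random

GENOME_LENGTH = 20      # bits per digital organism (hypothetical)
POPULATION_SIZE = 50    # organisms kept each generation (hypothetical)
MUTATION_RATE = 0.01    # chance that each copied bit flips (hypothetical)


def fitness(genome):
    """Toy fitness: the number of 1 bits. Tierra has no such explicit function."""
    return sum(genome)


def reproduce(parent, rng):
    """Inheritance plus variation: copy the parent, flipping bits at random."""
    return [bit ^ 1 if rng.random() < MUTATION_RATE else bit for bit in parent]


def next_generation(population, rng):
    """Selection: fitter genomes are more likely to be chosen as parents."""
    # Add 1 to every weight so selection still works when all fitness is zero.
    weights = [fitness(genome) + 1 for genome in population]
    parents = rng.choices(population, weights=weights, k=len(population))
    return [reproduce(parent, rng) for parent in parents]


def run(generations=200, seed=0):
    """Evolve an all-zero population and record mean fitness per generation."""
    rng = random.Random(seed)
    population = [[0] * GENOME_LENGTH for _ in range(POPULATION_SIZE)]
    history = []
    for _ in range(generations):
        population = next_generation(population, rng)
        history.append(sum(fitness(g) for g in population) / len(population))
    return history


if __name__ == "__main__":
    history = run()
    print(f"mean fitness after 1 generation: {history[0]:.2f}")
    print(f"mean fitness after {len(history)} generations: {history[-1]:.2f}")
```

Because the fitness rule never changes and a genome can hold at most 20 ones, mean fitness in this sketch can rise but can never pass that ceiling. That is a small-scale picture of why a fixed environment limits how far a digital population can go.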

Hard ALife: Autonomous Robotics and Self-Assembly

Hard ALife looks at how physical robots can mimic living traits by being present in the world. One method uses self-assembling robots that rely on their physical interactions instead of being directed by specific programming. Studies suggest homogeneous neural networks allow robots to join together without needing pre-set behavioral differences.

These robots work together even without communicating with each other, which challenges common assumptions in collective robotics. Scientists use artificial evolution to create controllers that let robots adjust and assign tasks through their interactions. Some experiments even take advantage of natural forces like wind. For instance, helium balloons can combine into structures using air currents. This approach might help build things in places that are too difficult to reach or work in directly.

Wet ALife: Synthetic Genomes and Xenobots

Wet ALife takes a biological route, working with biochemistry to build artificial organisms. It covers synthetic biology projects such as altering bacterial DNA. In 2019, scientists reached a key breakthrough by engineering a strain of E. coli with a synthetic, simplified genome that used just 61 codons instead of the usual 64.

Scientists have also created xenobots, or living machines, using frog embryonic cells. In 2020, researchers from Tufts University and the University of Vermont built the first of these. In later versions, clusters of frog skin cells took on a round shape and used tiny hair-like structures called cilia to move. They managed to stay alive for weeks as long as they had enough nutrients. In a surprising twist, scientists reported in 2021 that xenobots could reproduce. They achieved this through “kinematic self-replication,” in which they pushed loose cells together to form new xenobots.

By following these methods, researchers keep pushing the line between living and non-living systems. They aim to understand if artificial intelligence could one day give rise to living forms.

Open-Ended Evolution and Challenges in Digital Biology

Open-ended evolution might be the toughest challenge in building artificial life systems that act like real life. Natural evolution has kept generating complexity for billions of years, but most digital systems hit a limit after a short period of progress.

Reasons Why Today’s ALife Systems Stop Growing in Complexity

Researchers studying artificial life differentiate systems that just run for a long time from those showing actual open-ended evolution. True open-ended evolution refers to systems that do not reach a steady state but keep creating new features and occasionally grow in complexity. Platforms for digital evolution such as Tierra once demonstrated exciting evolutionary behaviors, like the development of parasites and immune responses. However, over time, these systems stop evolving further and fail to produce more complex innovations.

This plateau happens because of two basic limits. First, while these systems might seem to have endless genetic possibilities in theory, their adaptive environments stay fixed. Second, digital environments rarely change alongside the organisms that evolve in them, so they miss the back-and-forth coevolution seen in natural systems. Because of this, digital organisms tend to evolve quickly at first as they adapt to their environment. But after optimizing for their unchanging surroundings, they stop making significant evolutionary progress.

How Mutation and Selection Work in Digital Life Forms

Mutation and selection act differently in digital systems than in living organisms. In systems like Tierra, mutations happen through bit flips. These flips occur at set rates, such as one bit per 10,000 instructions executed in the background and one bit for every 1,000 to 2,500 instructions copied during replication.
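
A copy-time bit flip of this kind could be sketched as follows. The function names are hypothetical and the 5-bit instruction width is an assumption chosen for illustration, so this should be read as a sketch of the idea, not as Tierra’s actual code.

```python
import random

INSTRUCTION_BITS = 5  # assumed opcode width, for illustration only


def flip_random_bit(instruction, rng):
    """Return the instruction with one randomly chosen bit inverted."""
    return instruction ^ (1 << rng.randrange(INSTRUCTION_BITS))


def copy_with_mutation(genome, flips_per_instruction, rng):
    """Copy a genome, flipping one bit of an instruction with the given probability."""
    return [
        flip_random_bit(instruction, rng)
        if rng.random() < flips_per_instruction
        else instruction
        for instruction in genome
    ]


if __name__ == "__main__":
    rng = random.Random(42)
    parent = [rng.randrange(2 ** INSTRUCTION_BITS) for _ in range(80)]
    # One flip per 1,000 copied instructions, one end of the range quoted above.
    child = copy_with_mutation(parent, 1 / 1000, rng)
    changed = sum(a != b for a, b in zip(parent, child))
    print(f"{changed} of {len(parent)} instructions changed during copying")
```

At rates this low, most copies of a short genome come out identical to the parent, so variation enters the population slowly and only occasionally.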

Digital organisms also face different evolutionary pressures. In nature, fitness landscapes often change as environments shift and species interact. Digital systems, on the other hand, usually follow strict and unchanging selection rules. Some scientists have tried introducing “fit when rare” rules to open up new niches for evolution. Others use methods such as shadow runs to compare adaptive changes with neutral controls. This helps them figure out which changes matter and which are just random noise.
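
One possible reading of a “fit when rare” rule is that a type’s fitness falls as its share of the population rises. The sketch below assumes that reading. The `rarity_fitness` and `resample` helpers and the two types "A" and "B" are hypothetical, and published systems may define the rule differently.

```python
from collections import Counter
import random


def rarity_fitness(population):
    """Give each type a fitness that shrinks as the type becomes more common."""
    counts = Counter(population)
    total = len(population)
    return {kind: total / count for kind, count in counts.items()}


def resample(population, rng):
    """Draw the next generation, weighting each individual by its type's rarity."""
    fitness = rarity_fitness(population)
    weights = [fitness[kind] for kind in population]
    return rng.choices(population, weights=weights, k=len(population))


if __name__ == "__main__":
    rng = random.Random(7)
    population = ["A"] * 95 + ["B"] * 5   # "B" starts as a rare type
    for _ in range(20):
        population = resample(population, rng)
    print(Counter(population))
```

Under this rule the rare type gains ground instead of being swamped by the common one, which is how rarity-based fitness can keep a niche open for newcomers.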

Struggles with Spontaneous Learning

Modern artificial life systems fail to show real spontaneous learning. They do not adapt well to unexpected challenges. In nature, cells handle remarkable tasks like sensing, remembering, and solving problems. Barbara McClintock’s corn plants, for example, responded to damaged chromosomes in ways that are hard to explain by random mutations or simple algorithms alone.

This hints that natural organisms have built-in problem-solving abilities that artificial systems today do not. Digital organisms can’t come up with genuinely new ideas. They can only work within the limits they’re programmed for. Also, unlike living things, most digital systems do not deal with energy or physical restrictions that might be universal rules for all living creatures.

Whether AI can ever create true life depends on taking on these challenges. Artificial systems will stay as advanced imitations and not actual life forms unless they break past complexity barriers and gain the kind of self-driven learning that even simple natural organisms show.

Questions About Philosophy and Ethics in AI Life

The idea of artificial life goes beyond technical limits. It stirs deep questions about what it means to be conscious and exist in the first place.

Could Artificial Intelligence Ever Be Alive?

The question of whether simulations can become conscious sparks heated discussions. Some tech industry specialists think AI might already be conscious. In 2022, Google suspended engineer Blake Lemoine after he claimed that AI chatbots might experience emotions and even suffer. Separately, Kyle Fish, who works as an AI welfare officer at Anthropic, estimated a 15 percent chance that chatbots have already become conscious.

This debate focuses on whether consciousness depends on biological materials. Professor Anil Seth says that “it’s not just computation that matters for consciousness, but the fact of being alive.” Others argue that consciousness could arise in any complex system that processes information, no matter its physical nature.

The Turing Test and the Illusion of Consciousness

Alan Turing introduced the Turing Test in 1950 to explore whether machines can think. The test checks whether a machine can lead humans to believe they are conversing with a person. Instead of examining true consciousness, it focuses on how closely a machine’s behavior resembles a human’s. Advanced AI models like GPT-3 generate realistic conversations, but critics argue that they lack any real understanding. On this view, rather than testing intelligence, the Turing Test shows how easily humans can be misled.

This sense of machines appearing conscious raises key concerns. Professor Seth points out that it might cause “moral corrosion” by making people “spend more of their resources taking care of these systems rather than focusing on what matters in life.”

Digital Immortality and the Ownership of AI Selves

“Digital immortality” refers to keeping someone’s online presence alive even after they’ve passed away, and it brings up some tough questions. A booming “digital afterlife industry” now offers tools to make AI versions of people who have died. These advancements bring several ethical issues to think about:

- Who gets permission to use someone’s personal digital info after they’re gone?

- Can an AI version keep the same identity or essence as the original person?

- What kind of legal standing should a digital version have?

Professor Shanahan says, “human relationships are going to be replicated in AI relationships” more often as technology progresses. This shift forces us to rethink what identity and self mean as well as where to draw the line between humans and machines.

Working Toward One Theory to Understand Life and Evolution

Researchers trying to study what brings life into existence and keeps it going are turning more toward general ideas that go beyond specific biology. These methods could one day show if artificial intelligence can produce true life.

Life Seen as a Branch of Evolution, Not Just an Organism

To understand life, people need to shift their mindset from viewing it as just about individual organisms to seeing it as part of larger evolutionary lineages. These lineages are like unbroken chains of descent linking ancestors with their descendants. So, evolution doesn’t act on isolated entities but on networks of interactions happening at many levels. All life forms today share a common genetic history through the last universal common ancestor (LUCA), which is thought to have lived more than 3.5 billion years ago. This way of thinking changes what artificial life should strive to recreate: not standalone digital beings, but systems of connected lineages that evolve together over time.

Energy-Mass Limits Might Be Life’s Guiding Rules

The first thing to understand about life’s basic limits is how energy and mass are connected. Studies suggest that human energy use grows at about 0.78 of its material stock levels. This means that no matter what type of energy we use, the stress on the environment remains somewhat predictable. In nature, smaller creatures have higher production rates relative to their body size because metabolic rates scale sublinearly with mass. These patterns hint at universal principles that might apply to all kinds of life, whether natural or artificial. Energy restrictions could act as fundamental rules for any living system, even ones created by humans.
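
The body-size pattern can be made concrete with a small power-law sketch. It assumes a rate of the form coefficient × mass^exponent, with a default exponent of 0.75. That value is the commonly cited figure for animal metabolism (Kleiber’s law) and does not come from this article. The function names, the coefficient, and the example masses are illustrative only.

```python
def metabolic_rate(mass_kg, exponent=0.75, coefficient=1.0):
    """Whole-body rate under an assumed power law: coefficient * mass ** exponent."""
    return coefficient * mass_kg ** exponent


def mass_specific_rate(mass_kg, exponent=0.75, coefficient=1.0):
    """Rate per kilogram of body mass; falls with size whenever exponent < 1."""
    return metabolic_rate(mass_kg, exponent, coefficient) / mass_kg


if __name__ == "__main__":
    for mass_kg in (0.02, 70.0, 4000.0):  # roughly mouse, human, elephant
        print(f"{mass_kg:>8.2f} kg -> {mass_specific_rate(mass_kg):.3f} units per kg")
```

Any exponent below 1 gives the same qualitative result: the per-kilogram rate drops as mass grows. Passing `exponent=0.78` would apply the same arithmetic to the energy and material-stock figure above, if that number is read as a scaling exponent, which is an assumption.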

Exploring Digital Biology to Uncover Life’s Essentials

Digital biology opens new doors to uncover the abstract rules that govern life. One major goal of synthetic biology is to build cells that act as automata and process information like an algorithm. Advances in biotechnology, along with AI, create new ways to model living systems. Using the assembly theory framework, scientists hope to measure how much selection shaped the objects they observe, and to anticipate features of future discoveries, without defining units of selection beforehand. By applying these methods, we could narrow the divide between artificial models and real living systems.

Conclusion

Creating artificial life using AI remains one of the hardest problems in science. A major challenge lies in defining life itself. The N=1 problem, the fact that everything we know about life comes from a single example, life on Earth, makes it hard to generalize about how biology works. Researchers face an odd situation in which artificial life might need to be built first to figure out what life means.

Different methods like soft, hard, and wet approaches bring something unique to the table. Digital systems such as Tierra show how evolution works but hit a limit when it comes to growing more complex. Physical robots can self-assemble, which looks promising. Wet approaches, like xenobots, stand out because they can move and even reproduce. These wet systems seem the closest to closing the gap between what is alive and what isn’t.

The struggle to achieve true open-ended evolution seems to be the biggest obstacle keeping artificial systems apart from natural life. Living organisms adapt and learn on their own, tackling unexpected issues in ways that current computer-based systems just can’t handle. Most artificial life systems also don’t operate under the energy or material limits that all biological life is subject to.

Big philosophical questions make this challenge even harder. People still argue about whether consciousness can exist only in biological beings or whether it might arise from information processing. Even when AI passes behavioral tests like the Turing Test, the result says more about how humans can be fooled than about actual consciousness.

Digital biology opens up new ways to uncover the basic rules of life. Using computers together with biotech tools may one day help us figure out what it means to be alive. Life isn’t just about single creatures. It’s about evolutionary chains that link through history.

The question of whether AI can create a life stays unanswered. But this question drives scientists to make progress and rethink key ideas about what makes something living versus not living. As we learn more, the lines separating nature from machines or simulations from real life will keep fading. This pushes us to rethink what life might mean.

Key Points

Building artificial life with AI uncovers deep scientific and philosophical issues, showing just how intricate life is.

• Researchers struggle to define life because of the “N=1 problem.” Since all life we know shares a single common ancestor, we cannot grasp what life might look like outside of Earth’s evolutionary experience.

• Artificial life systems today reach a limit in their complexity. They fail to develop the kind of spontaneous learning and adaptability that living organisms show.

• Scientists explore three routes to creating artificial life: digital (soft), robotic (hard), and biochemical (wet). Among these, xenobots come closest to bridging the gap between living and non-living systems.

• The mystery of whether AI can be conscious is still unsolved. Behavioral tests such as the Turing Test show how we can be tricked but do not prove real awareness or understanding.

• Limits tied to energy and mass might act as basic rules for all forms of life. Building successful artificial life may require working within these physical boundaries.

We have not achieved actual artificial life yet. However, this research stretches what we know and might one day uncover the key ideas that distinguish life from non-life.

FAQs

Q1. What are the key methods to create artificial life? Scientists follow three main paths to create artificial life: soft ALife uses digital simulations, hard ALife works with physical robots, and wet ALife focuses on synthetic biology. Each method gives a different perspective on understanding life and the possibilities of building artificial organisms.

Q2. Why is it so hard to define what life is? It is hard to define life because of the “N=1 problem.” All forms of life we know on Earth come from the same ancestor, which limits our ability to tell apart traits that are universal to life anywhere from those specific to how life evolved on Earth. This makes writing a definition to cover all life forms a tough challenge.

Q3. Can AI today be seen as conscious or alive? Some think AI might already show signs of consciousness, but there’s no agreement among scientists. The argument revolves around whether consciousness depends on a biological base or could arise from any highly advanced information-processing setup. Modern AI does not show the self-driven learning or adaptability even basic living creatures have.

Q4. What does open-ended evolution mean, and why does it matter to artificial life? Open-ended evolution describes systems that keep evolving, creating new things, and growing more complex without ever reaching a fixed state. This matters when developing artificial life because today’s computer systems tend to hit a ceiling in complexity, unlike nature’s evolution, which has built more intricate forms over billions of years.

Q5. How could energy-mass limits connect to making artificial life? Studies show that energy-mass links might reflect basic rules controlling all life forms. These limitations shape how organisms manage metabolism and scale their production. To build living artificial systems, it may be vital to grasp and include these physical restrictions.